Imagine a project that needs several different technologies: a web server built on Node.js, MongoDB as the database, and a frontend built with some other technology. Developing an application stack out of all these components raises a lot of issues. Compatibility with the underlying OS is one of them: we have to make sure every service is compatible with the OS and with the other services, and that their libraries and dependencies can coexist, since one service may require one version of a library while another service requires a different version. On top of that, the architecture of the application changes over time.

Every time we upgrade one of these components or change the database structure, we have to go through the same process of checking compatibility between the various components. And every time a new developer comes on board, setting up a new environment gets really difficult: they need to follow a long set of instructions before their environment finally works.

Why do we need Docker?

So there arises the need for something that helps with the compatibility issue, lets us modify or replace these components without affecting the others, and even lets us change the underlying operating system as required. This is where Docker comes in.

What is Docker?

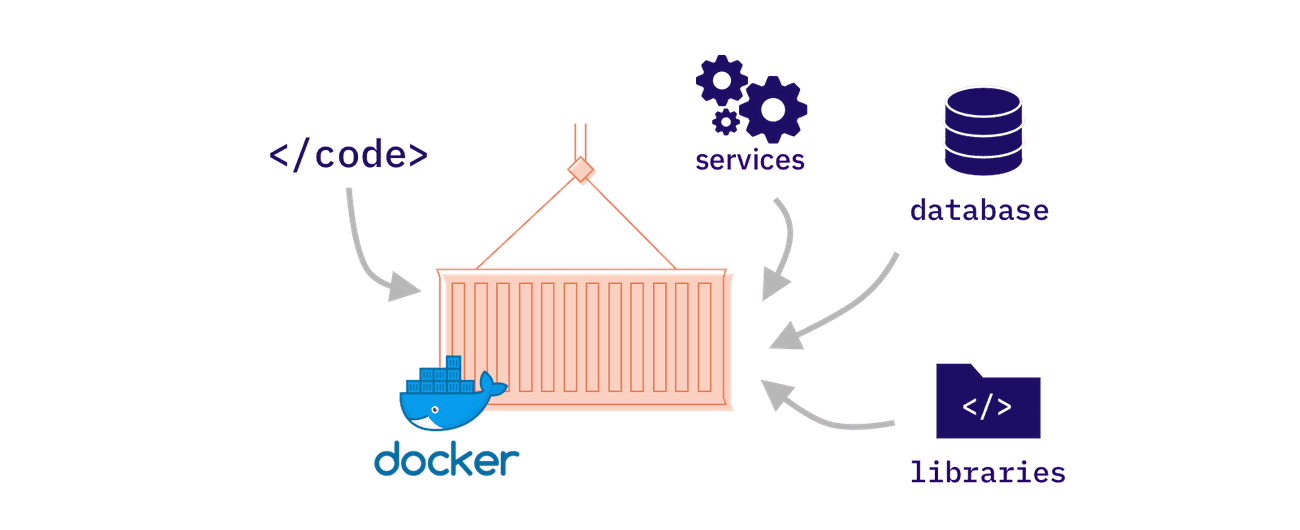

Docker is a Linux-based, open-source containerization platform that allows us to create, deploy, and manage containerized applications.

A developer defines all the application's dependencies in a Dockerfile, which is then used to build a Docker image, and that image in turn defines a Docker container. Doing this means your application runs the same way in any environment where Docker is available. So you only need to write the Docker configuration once, and after that starting the application takes a single command:

docker run

This way Docker runs each component in its own container, with its own dependencies and libraries, all on the same OS but in separate environments.
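To make this concrete, here is a minimal sketch of that workflow. The `demo-app` directory, the one-line `server.js`, and the `node:18-alpine` base image are assumptions chosen for illustration, not part of any real project. The build and run steps are skipped when no Docker daemon is reachable.

```shell
# Create a throwaway project directory (hypothetical name).
mkdir -p demo-app

# A tiny Node.js server so the image has something to run.
cat > demo-app/server.js <<'EOF'
const http = require("http");
http.createServer((req, res) => res.end("hello from a container\n")).listen(3000);
EOF

# The Dockerfile lists every step needed to assemble the image.
cat > demo-app/Dockerfile <<'EOF'
FROM node:18-alpine
WORKDIR /app
COPY server.js .
EXPOSE 3000
CMD ["node", "server.js"]
EOF

# Build the image and start a container only if a Docker daemon is reachable.
if docker info >/dev/null 2>&1; then
  docker build -t demo-app demo-app
  docker run -d --rm --name demo-app -p 3000:3000 demo-app
else
  echo "Docker daemon not reachable; skipping build and run"
fi
```

Once the container is up, `curl http://localhost:3000` should return the greeting, and `docker stop demo-app` shuts it down.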

Docker Architecture

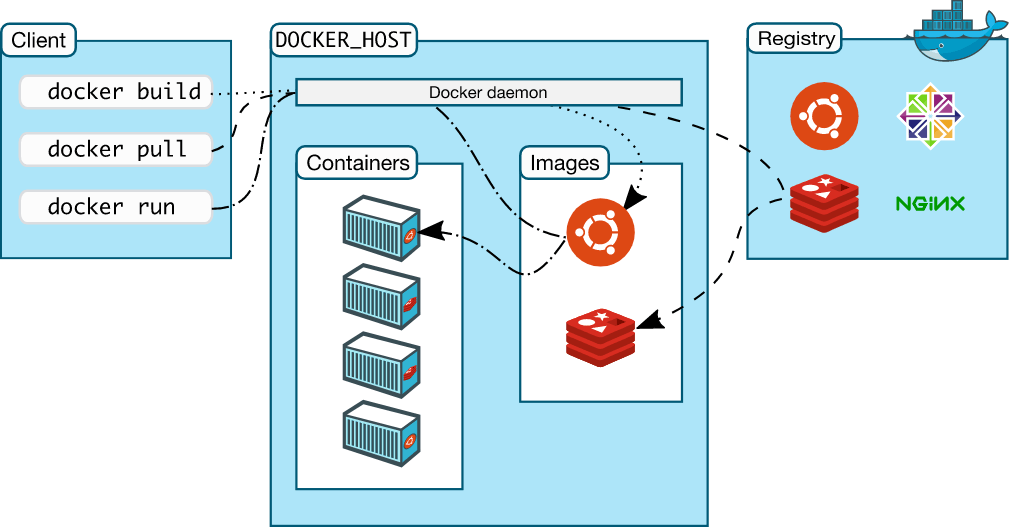

Docker comprises the following different components within its core architecture:

Docker Daemon

It is the server process (`dockerd`) that runs on the host machine. It is responsible for building and managing Docker objects such as images, containers, networks, and volumes.

Docker Client

It is a command-line interface (CLI) for sending instructions to the Docker Daemon using special Docker commands.

REST API

It acts as a bridge between the daemon and the client. Any command issued using the client passes through the API to finally reach the daemon.

According to the official documentation:

Docker uses a client-server architecture. The Docker client talks to the Docker daemon, which does the heavy lifting of building, running, and distributing your Docker containers.
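Because the client only talks to the daemon through the REST API, you can skip the CLI and call the API yourself. The sketch below assumes a Linux host where the daemon listens on the default Unix socket `/var/run/docker.sock` (Docker Desktop and rootless setups may use a different path) and that `curl` is installed. The `docker_api` helper name is made up for this example.

```shell
# Default socket path on Linux; override DOCKER_SOCK if your setup differs.
DOCKER_SOCK="${DOCKER_SOCK:-/var/run/docker.sock}"

# Hypothetical helper: send a GET request to the daemon's REST API.
docker_api() {
  curl --silent --unix-socket "$DOCKER_SOCK" "http://localhost$1"
}

if [ -S "$DOCKER_SOCK" ] && command -v curl >/dev/null 2>&1; then
  # Roughly what `docker version` asks the daemon for.
  docker_api /version || echo "request failed"
  echo
  # Roughly what `docker ps` asks the daemon for: running containers as JSON.
  docker_api /containers/json || echo "request failed"
  echo
else
  echo "No Docker socket at $DOCKER_SOCK; skipping API calls"
fi
```

The responses are plain JSON, which is why other tools and SDKs can drive the same daemon without going through the `docker` command.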

Dockerfile

It is a text document that contains all the steps and instructions to build an image. Docker can build images automatically by reading the instructions from a Dockerfile.

Docker Images

Images are multi-layered, self-contained files that act as a package or template: they hold the set of instructions for creating a container that can run on the Docker platform. They provide a convenient way to package applications and preconfigured server environments, which you can keep for private use or share publicly with other Docker users. Each layer receives an ID, calculated as a SHA-256 hash of the layer contents.
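The idea of an ID derived from content is easy to try out. In the sketch below, the two text files are only stand-ins for layer contents (real layers are filesystem archives, not text files), and `sha256sum` is assumed to be available, as it is on most Linux systems (on macOS, `shasum -a 256` plays the same role). The last step prints the real layer digests of `ubuntu:18.04`, but only if that image has already been pulled.

```shell
# Two stand-in "layers": any change in content produces a different digest.
printf 'contents of layer A\n' > layer-a.txt
printf 'contents of layer A, plus one more file\n' > layer-b.txt

digest_a=$(sha256sum layer-a.txt | cut -d' ' -f1)
digest_b=$(sha256sum layer-b.txt | cut -d' ' -f1)
echo "sha256:$digest_a"
echo "sha256:$digest_b"

# If the image is present locally, list the digests of its real layers.
if docker image inspect ubuntu:18.04 >/dev/null 2>&1; then
  docker image inspect --format '{{json .RootFS.Layers}}' ubuntu:18.04
fi
```

Since identical content always hashes to the same ID, two images built on the same base can share those layers instead of storing them twice.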

Docker Containers

Containers are running instances of Docker images. Each one has its own isolated environment and its own processes.

Docker Registries

A Docker registry stores Docker images. Docker Hub is a public registry that anyone can use, and Docker is configured to look for images on Docker Hub by default. You can even run your own private registry.
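Which registry Docker talks to is decided by the image name itself. As a rough sketch, the commands below start a private registry from the official `registry:2` image and push a copy of `ubuntu:18.04` to it. The address `localhost:5000` and the container name `local-registry` are assumptions for this example, and everything that needs Docker is skipped when no daemon is reachable.

```shell
REGISTRY="localhost:5000"        # hypothetical private registry address
IMAGE="ubuntu:18.04"
TARGET="$REGISTRY/$IMAGE"        # the registry host becomes part of the image name
echo "will push to: $TARGET"

if docker info >/dev/null 2>&1; then
  # Run the official registry image as a local, private registry.
  docker run -d --name local-registry -p 5000:5000 registry:2
  docker pull "$IMAGE"           # no registry in the name, so Docker Hub is used
  docker tag "$IMAGE" "$TARGET"  # give the same image a second, registry-qualified name
  docker push "$TARGET"          # goes to localhost:5000, not Docker Hub
  docker rm -f local-registry    # clean up the registry container
else
  echo "Docker daemon not reachable; skipping registry demo"
fi
```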

How does Docker work?

Operating systems like Ubuntu, Fedora, and CentOS all consist of two parts:

OS Kernel: Responsible for communication with the underlying hardware.

Set of software: This is what makes one OS different from another, through a different UI, drivers, file managers, tools, and so on. So we have a common Linux kernel shared across distributions, plus some custom software that differentiates them from each other.

> Docker containers share the OS kernel.

This means that if you have an Ubuntu OS with Docker installed on it, Docker can run containers based on any other OS on top of it, as long as they are all based on the same kernel. In this case, that's Linux. Each Docker container only carries the additional software that makes these OSes different. An OS that doesn't share the same kernel, such as Windows, is another story: you won't be able to run a Windows-based container on a Docker host with Linux on it. For that, you need Docker on a Windows server.

When you install Docker on Windows and run a Linux container on it, you are not really running a Linux container on Windows. Windows runs the Linux container inside a Linux virtual machine on the host.
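One way to see the shared kernel for yourself is to compare `uname -r` on the host with the same command inside a container. This is a rough sketch, assuming the `ubuntu:18.04` image: on a Linux host the two values should match, while on Docker Desktop for Windows or Mac the container should report the kernel of the Linux virtual machine instead.

```shell
# Kernel release as seen by the host.
host_kernel=$(uname -r)
echo "host kernel:      $host_kernel"

if docker info >/dev/null 2>&1; then
  # Kernel release as seen from inside a short-lived Ubuntu container.
  container_kernel=$(docker run --rm ubuntu:18.04 uname -r)
  echo "container kernel: $container_kernel"
else
  echo "Docker daemon not reachable; skipping container check"
fi
```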

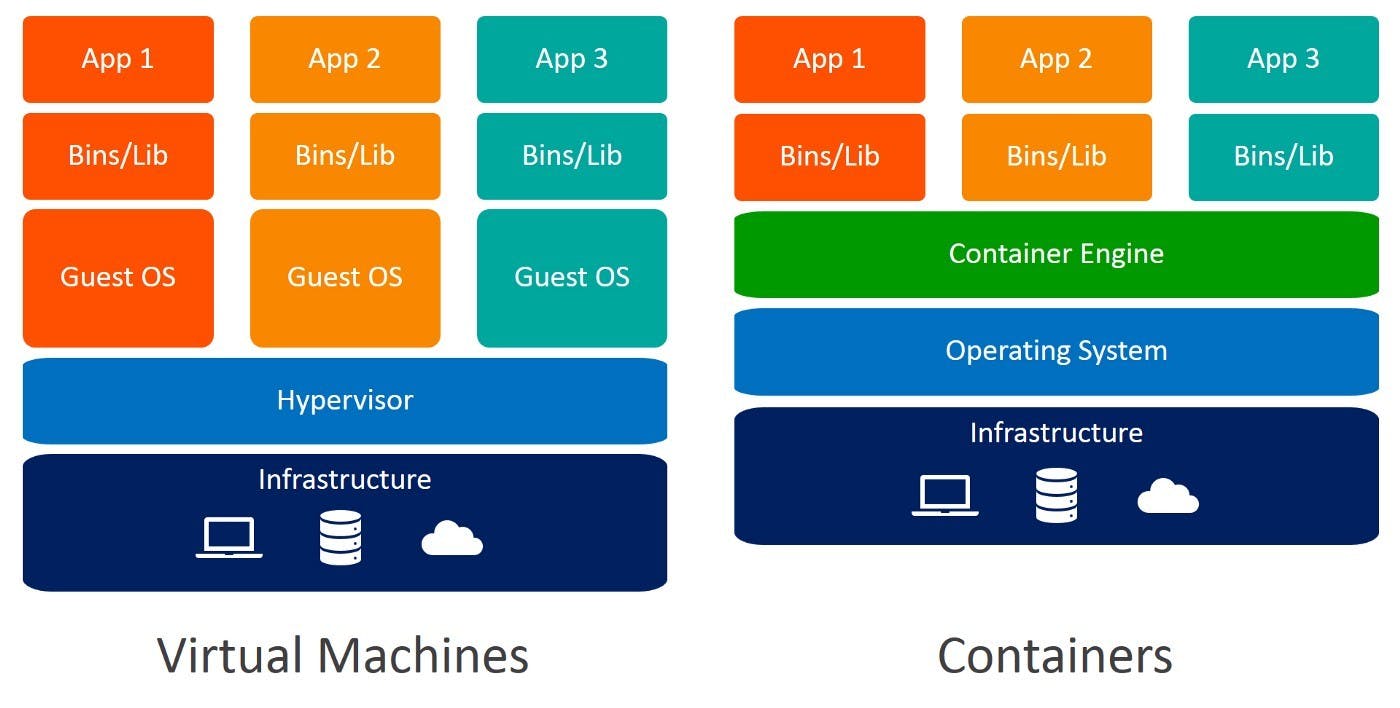

Containerization vs Virtualization

Virtualization enables you to run multiple operating systems on the hardware of a single physical server, while containerization enables you to deploy multiple applications using the same operating system on a single virtual machine or server. Virtual machines are great for supporting applications that require an operating system's full functionality, for deploying multiple applications on a server, or for managing a wide variety of operating systems.

Containers are a better choice when your biggest priority is to minimize the number of servers you're using for multiple applications. They are also an excellent choice for tasks with a much shorter lifecycle: with their fast setup time, they suit tasks that may only take a few hours. Virtual machines have a longer lifecycle than containers and are best used for longer periods of time.

Basic Commands

$ docker pull ubuntu:18.04          # Downloads the image; 18.04 is the version tag

$ docker images                     # Lists Docker images

$ docker run <image>                # Creates and starts a container from an image

$ docker rmi <image>                # Deletes a Docker image if no container is using it

$ docker rmi $(docker images -q)    # Deletes all Docker images that are not in use

Docker Hub

Docker Hub is a hosted repository service provided by Docker for finding and sharing container images with your team.

For more info, see the official Docker Hub documentation.

Installation

Docker runs on all three major platforms: Mac, Windows, and Linux. For download and installation, refer to the official Docker installation guide.

Kubernetes

Having too many containers makes managing them difficult.

Kubernetes (K8s) is an open-source container orchestration system for automating software deployment, scaling, and management. It provides an API to control how and where those containers will run.

It lets you run your Docker containers and workloads, and helps you tackle some of the operational complexity of scaling many containers deployed across multiple servers.
